Background

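I downloaded Fedora 39 from the official Fedora site and installed it in Hyper-V on Windows 11, with the network set to the default, Default Switch. I'll call this machine the VM.

The VM's IP is 172.24.133.231. Pinging 172.24.133.231 from the Windows host works,

and ssh 172.24.133.231 from the Windows host connects normally: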

PS C:\Users\14190> ping 172.24.133.231

Pinging 172.24.133.231 with 32 bytes of data:

Reply from 172.24.133.231: bytes=32 time<1ms TTL=64

Reply from 172.24.133.231: bytes=32 time<1ms TTL=64

Reply from 172.24.133.231: bytes=32 time<1ms TTL=64

Ping statistics for 172.24.133.231:

Packets: Sent = 3, Received = 3, Lost = 0 (0% loss),

Approximate round trip times in milli-seconds:

Minimum = 0ms, Maximum = 0ms, Average = 0ms

Control-C

PS C:\Users\14190> ssh 172.24.133.231

The authenticity of host '172.24.133.231 (172.24.133.231)' can't be established.

ED25519 key fingerprint is SHA256:nmlu5BX1ZYHwq47v8o0bsIyeQTssmT9oKgCNuu/fKM8.

This host key is known by the following other names/addresses:

C:\Users\14190/.ssh/known_hosts:5: 172.22.34.185

Are you sure you want to continue connecting (yes/no/[fingerprint])?

On the VM, start a server with python3 -m http.server 9999:

root@localhost:~# python3 -m http.server 9999

Serving HTTP on 0.0.0.0 port 9999 (http://0.0.0.0:9999/) ...

On the Windows host, curl 172.24.133.231:9999 gets no response:

PS C:\Users\14190> curl -v 172.24.133.231:9999

VERBOSE: GET with 0-byte payload

curl : Unable to connect to the remote server

At line:1 char:1

+ curl -v 172.24.133.231:9999

+ ~~~~~~~~~~~~~~~~~~~~~~~~~~~

+ CategoryInfo : InvalidOperation: (System.Net.HttpWebRequest:HttpWebRequest) [Invoke-WebRequest], WebException

+ FullyQualifiedErrorId : WebCmdletWebResponseException,Microsoft.PowerShell.Commands.InvokeWebRequestCommand
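In this PowerShell session `curl` resolves to Invoke-WebRequest (see the error text above), which only reports "Unable to connect" and does not say whether the connection was refused, timed out, or was rejected in some other way. The following is a minimal sketch of a probe that makes that distinction. The function name `probe` and the 3-second timeout are illustrative choices, not part of the original setup.

```python
import socket


def probe(host: str, port: int, timeout: float = 3.0) -> str:
    """Try one TCP connection and classify the outcome.

    Returns "open", "refused", "timeout", or "error: <details>".
    """
    try:
        # create_connection performs the full TCP handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return "open"
    except ConnectionRefusedError:
        # The peer answered with a TCP RST: nothing is listening there.
        return "refused"
    except (socket.timeout, TimeoutError):
        # No answer at all within the timeout: packets were silently lost.
        return "timeout"
    except OSError as exc:
        # Anything else, e.g. an ICMP error turned into "No route to host".
        return f"error: {exc}"


# Example (hypothetical call): probe("172.24.133.231", 9999)
```

A "refused" result points at the service (nothing is listening), while "timeout" or an ICMP-derived error points at something between the client and the listening socket.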

Inside the VM, curl 172.24.133.231:9999 times out as well:

root@localhost:~# curl -v http://172.24.133.231:9999

* processing: http://172.24.133.231:9999

Let's try binding to localhost instead:

root@localhost:~# python3 -m http.server 9999 --bind 127.0.0.1

Serving HTTP on 127.0.0.1 port 9999 (http://127.0.0.1:9999/) ...

Still no response:

root@localhost:~# curl -v http://127.0.0.1:9999

* processing: http://127.0.0.1:9999

Troubleshooting

Next, install and start nginx to rule out a problem with the service itself.

Hmm, nginx responds just fine:

root@localhost:~# curl -v http://127.0.0.1

* processing: http://127.0.0.1

* Trying 127.0.0.1:80...

* Connected to 127.0.0.1 (127.0.0.1) port 80

> GET / HTTP/1.1

> Host: 127.0.0.1

> User-Agent: curl/8.2.1

> Accept: */*

>

< HTTP/1.1 200 OK

< Server: nginx/1.24.0

< Date: Sat, 20 Apr 2024 15:46:09 GMT

< Content-Type: text/html

< Content-Length: 8474

< Last-Modified: Mon, 20 Feb 2023 17:42:39 GMT

< Connection: keep-alive

< ETag: "63f3b10f-211a"

Accessing it by IP works too:

root@localhost:~# curl -v http://172.24.133.231

* processing: http://172.24.133.231

* Trying 172.24.133.231:80...

* Connected to 172.24.133.231 (172.24.133.231) port 80

> GET / HTTP/1.1

> Host: 172.24.133.231

> User-Agent: curl/8.2.1

> Accept: */*

>

< HTTP/1.1 200 OK

< Server: nginx/1.24.0

< Date: Sat, 20 Apr 2024 15:47:09 GMT

< Content-Type: text/html

< Content-Length: 8474

< Last-Modified: Mon, 20 Feb 2023 17:42:39 GMT

< Connection: keep-alive

< ETag: "63f3b10f-211a"

< Accept-Ranges: bytes

What if nginx listens on a different port? (I changed it to an uncommon port, 8001.)

#/etc/nginx/nginx.conf

server {

listen 8001;

listen [::]:8001;

....

}

root@localhost:~# netstat -antp

Active Internet connections (servers and established)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 0.0.0.0:5355 0.0.0.0:* LISTEN 830/systemd-resolve

tcp 0 0 127.0.0.53:53 0.0.0.0:* LISTEN 830/systemd-resolve

tcp 0 0 0.0.0.0:8001 0.0.0.0:* LISTEN 332276/nginx: maste

tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 963/sshd: /usr/sbin

tcp 0 0 127.0.0.54:53 0.0.0.0:* LISTEN 830/systemd-resolve

tcp 0 0 127.0.0.1:9999 0.0.0.0:* LISTEN 328232/python3

tcp 0 0 172.24.133.231:22 172.24.128.1:3504 ESTABLISHED 14243/sshd: root [p

tcp 0 248 172.24.133.231:22 172.24.128.1:8762 ESTABLISHED 23350/sshd: root [p

From inside the VM it is still reachable:

root@localhost:~# curl -v http://127.0.0.1:8001

* processing: http://127.0.0.1:8001

* Trying 127.0.0.1:8001...

* Connected to 127.0.0.1 (127.0.0.1) port 8001

> GET / HTTP/1.1

> Host: 127.0.0.1:8001

> User-Agent: curl/8.2.1

> Accept: */*

>

< HTTP/1.1 200 OK

< Server: nginx/1.24.0

< Date: Sat, 20 Apr 2024 15:51:15 GMT

< Content-Type: text/html

< Content-Length: 8474

< Last-Modified: Mon, 20 Feb 2023 17:42:39 GMT

< Connection: keep-alive

< ETag: "63f3b10f-211a"

root@localhost:~# curl -v http://172.24.133.231:8001

* processing: http://172.24.133.231:8001

* Trying 172.24.133.231:8001...

* Connected to 172.24.133.231 (172.24.133.231) port 8001

> GET / HTTP/1.1

> Host: 172.24.133.231:8001

> User-Agent: curl/8.2.1

> Accept: */*

>

< HTTP/1.1 200 OK

< Server: nginx/1.24.0

< Date: Sat, 20 Apr 2024 15:51:19 GMT

< Content-Type: text/html

< Content-Length: 8474

< Last-Modified: Mon, 20 Feb 2023 17:42:39 GMT

< Connection: keep-alive

< ETag: "63f3b10f-211a"

< Accept-Ranges: bytes

<

<!doctype html>

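Oddly, port 9999, which was unreachable a moment ago, also became reachable after nginx was restarted. (I'll leave this as an open question and look into it if it reproduces.)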

root@localhost:~# curl -v http://127.0.0.1:9999

* processing: http://127.0.0.1:9999

* Trying 127.0.0.1:9999...

* Connected to 127.0.0.1 (127.0.0.1) port 9999

> GET / HTTP/1.1

> Host: 127.0.0.1:9999

> User-Agent: curl/8.2.1

> Accept: */*

>

* HTTP 1.0, assume close after body

< HTTP/1.0 200 OK

< Server: SimpleHTTP/0.6 Python/3.12.2

< Date: Sat, 20 Apr 2024 15:54:07 GMT

< Content-type: text/html; charset=utf-8

< Content-Length: 1279

<

root@localhost:~# curl -v http://172.24.133.231:9999

* processing: http://172.24.133.231:9999

* Trying 172.24.133.231:9999...

* Connected to 172.24.133.231 (172.24.133.231) port 9999

> GET / HTTP/1.1

> Host: 172.24.133.231:9999

> User-Agent: curl/8.2.1

> Accept: */*

>

* HTTP 1.0, assume close after body

< HTTP/1.0 200 OK

< Server: SimpleHTTP/0.6 Python/3.12.2

< Date: Sat, 20 Apr 2024 15:55:46 GMT

< Content-type: text/html; charset=utf-8

< Content-Length: 1279

<

Setting that aside for now, let's try to reach nginx on port 8001 from the Windows host:

PS C:\Users\14190> curl -v http://172.24.133.231:8001

VERBOSE: GET with 0-byte payload

curl : Unable to connect to the remote server

At line:1 char:1

+ curl -v http://172.24.133.231:8001

+ ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

+ CategoryInfo : InvalidOperation: (System.Net.HttpWebRequest:HttpWebRequest) [Invoke-WebRequest], WebException

+ FullyQualifiedErrorId : WebCmdletWebResponseException,Microsoft.PowerShell.Commands.InvokeWebRequestCommand

Same result: it still times out.

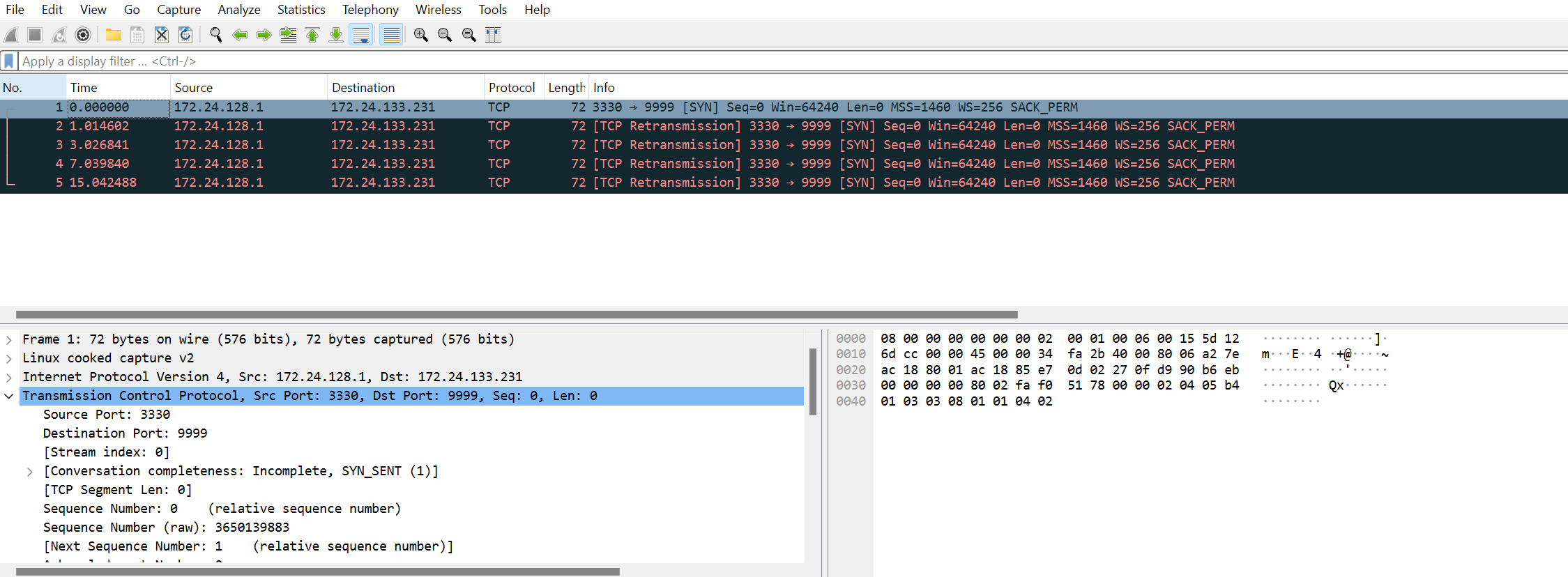

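The first question to settle is whether packets actually get from the host to the VM, or whether they are lost somewhere along the path. So we capture packets on the VM (eth0 is the interface that holds the IP):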

tcpdump -i any -s0 -nnn -vvv port 9999 -w server.pcap

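The capture shows the SYN packets from host to VM, but the VM never replies.

At this point there are two possibilities:

- The application never received the packet: after passing the capture point it was dropped on the way to the application, so the listener on port 9999 never saw it.

- The application received the packet, but its reply was dropped before reaching the capture point.

Let's look at the second case first: how do we confirm whether the application received the packet and replied, with the reply being dropped before the capture point?

One option is to add debugging to the application so that it logs every packet it receives. Another is to use iptables to log packets at the receive, send, and forward hooks in Linux: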

iptables --table raw -A PREROUTING -i eth0 -p tcp --dport 9999 -j LOG --log-prefix "iptables raw PREROUTING SEEN"

iptables --table mangle -A PREROUTING -i eth0 -p tcp --dport 9999 -j LOG --log-prefix "iptables mangle PREROUTING SEEN"

iptables --table nat -A PREROUTING -i eth0 -p tcp --dport 9999 -j LOG --log-prefix "iptables nat PREROUTING SEEN"

# local input

iptables --table mangle -A INPUT -i eth0 -p tcp --dport 9999 -j LOG --log-prefix "iptables mangle INPUT SEEN"

iptables --table nat -A INPUT -i eth0 -p tcp --dport 9999 -j LOG --log-prefix "iptables nat INPUT SEEN"

iptables --table filter -A INPUT -i eth0 -p tcp --dport 9999 -j LOG --log-prefix "iptables filter INPUT SEEN"

# forward

iptables --table mangle -A FORWARD -i eth0 -p tcp --dport 9999 -j LOG --log-prefix "iptables mangle FORWARD SEEN"

iptables --table filter -A FORWARD -i eth0 -p tcp --dport 9999 -j LOG --log-prefix "iptables filter FORWARD SEEN"

#output

iptables --table filter -A OUTPUT -p tcp --sport 9999 -j LOG --log-prefix "iptables filter OUTPUT SEEN"

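From this we confirmed that the application never sent a reply (which rules out the case where a reply is sent and then dropped before the capture point).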
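Reading the resulting kernel log by eye gets tedious, so here is a minimal sketch that counts which hooks logged the packet. It assumes the kernel log lines contain the prefixes set above; the sample lines in the comments are hypothetical, not copied from the VM. Note that the kernel log prefix is limited to 29 characters, so the longer prefixes get cut short (the trace output later in this post shows "iptables mangle PREROUTING SE"). The pattern therefore matches only up to "SE".

```python
import re
from collections import Counter

# Matches e.g. "iptables filter INPUT SEEN" and the truncated
# "iptables mangle PREROUTING SE"; captures the table and chain names.
HOOK_RE = re.compile(r"iptables (\w+) ([A-Z]+) SE")


def hooks_seen(log_lines):
    """Count log hits per "<table> <chain>" hook."""
    counts = Counter()
    for line in log_lines:
        match = HOOK_RE.search(line)
        if match:
            counts[f"{match.group(1)} {match.group(2)}"] += 1
    return counts


def missing_hooks(counts, expected=("filter INPUT", "filter OUTPUT")):
    """Return the expected hooks that never logged a packet."""
    return [hook for hook in expected if counts[hook] == 0]


# Hypothetical sample lines, shaped like kernel LOG target output:
#   iptables filter INPUT SEENIN=eth0 OUT= SRC=172.24.128.1 ... DPT=9999
#   iptables mangle PREROUTING SEIN=eth0 OUT= SRC=172.24.128.1 ... DPT=9999
```

If "filter INPUT" shows up but "filter OUTPUT" never does, the SYN reached the input path and nothing was ever sent back from port 9999.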

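So the packet is most likely being dropped inside the kernel. A search turned up How to retrieve packet drop reasons in the Linux kernel | Red Hat Developer,

in which Red Hat introduces two tools, perf and dropwatch.

We use dropwatch here; for usage details see Using dropwatch to monitor packet drops (netbeez.net).

# Install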

root@localhost:/boot# dnf install dropwatch -y

root@localhost:/boot# dropwatch -lkas

Initializing kallsyms db

dropwatch> help

Command Syntax:

exit - Quit dropwatch

help - Display this message

set:

alertlimit <number> - capture only this many alert packets

alertmode <mode> - set mode to "summary" or "packet"

trunc <len> - truncate packets to this length. Only applicable when "alertmode" is set to "packet"

queue <len> - queue up to this many packets in the kernel. Only applicable when "alertmode" is set to "packet"

sw <true | false> - monitor software drops

hw <true | false> - monitor hardware drops

start - start capture

stop - stop capture

show - show existing configuration

stats - show statistics

dropwatch> set alertmode packet

Setting alert mode

Alert mode successfully set

dropwatch> start

Enabling monitoring...

Kernel monitoring activated.

Issue Ctrl-C to stop monitoring

drop at: nft_do_chain+0x40c/0x620 [nf_tables] (0xffffffffc0a514fc)

origin: software

input port ifindex: 2

timestamp: Sun Apr 21 10:19:48 2024 990406165 nsec

protocol: 0x800

length: 66

original length: 66

drop reason: NETFILTER_DROP

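This says the packet was dropped at nft_do_chain+0x40c/0x620. I don't know exactly which line of kernel source that offset maps to, but nft_do_chain is the function in the nf_tables module that evaluates the rules of a chain.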
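When many drops scroll by, it helps to reduce each record to the three fields that matter: the function, the kernel module, and the drop reason. The sketch below parses one dropwatch record in the "packet" alert mode format shown above. The function name `summarize_drop` is made up for this example, and the parser only assumes the field layout visible in that output.

```python
import re

# "drop at: <function>+0x<offset>/0x<size> [<module>] (<address>)"
DROP_AT_RE = re.compile(
    r"drop at:\s+(?P<func>[\w.]+)\+0x[0-9a-f]+/0x[0-9a-f]+"
    r"(?:\s+\[(?P<module>\w+)\])?"
)


def summarize_drop(record: str) -> dict:
    """Extract function, module, and drop reason from one dropwatch record."""
    fields = {}
    for line in record.splitlines():
        # Split on the first colon only, so timestamps keep their colons.
        key, sep, value = line.partition(":")
        if sep:
            fields[key.strip()] = value.strip()
    match = DROP_AT_RE.search(record)
    return {
        "function": match.group("func") if match else None,
        "module": match.group("module") if match else None,
        "reason": fields.get("drop reason"),
    }
```

For the record above this yields the function nft_do_chain, the module nf_tables, and the reason NETFILTER_DROP, which is what points the investigation at the firewall rules.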

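Either way, we now know that nf_tables is what drops the packet. nftables is a firewall framework similar to iptables and is its successor; both are built on netfilter.

The firewalld that ships with CentOS/Fedora is built on nftables.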

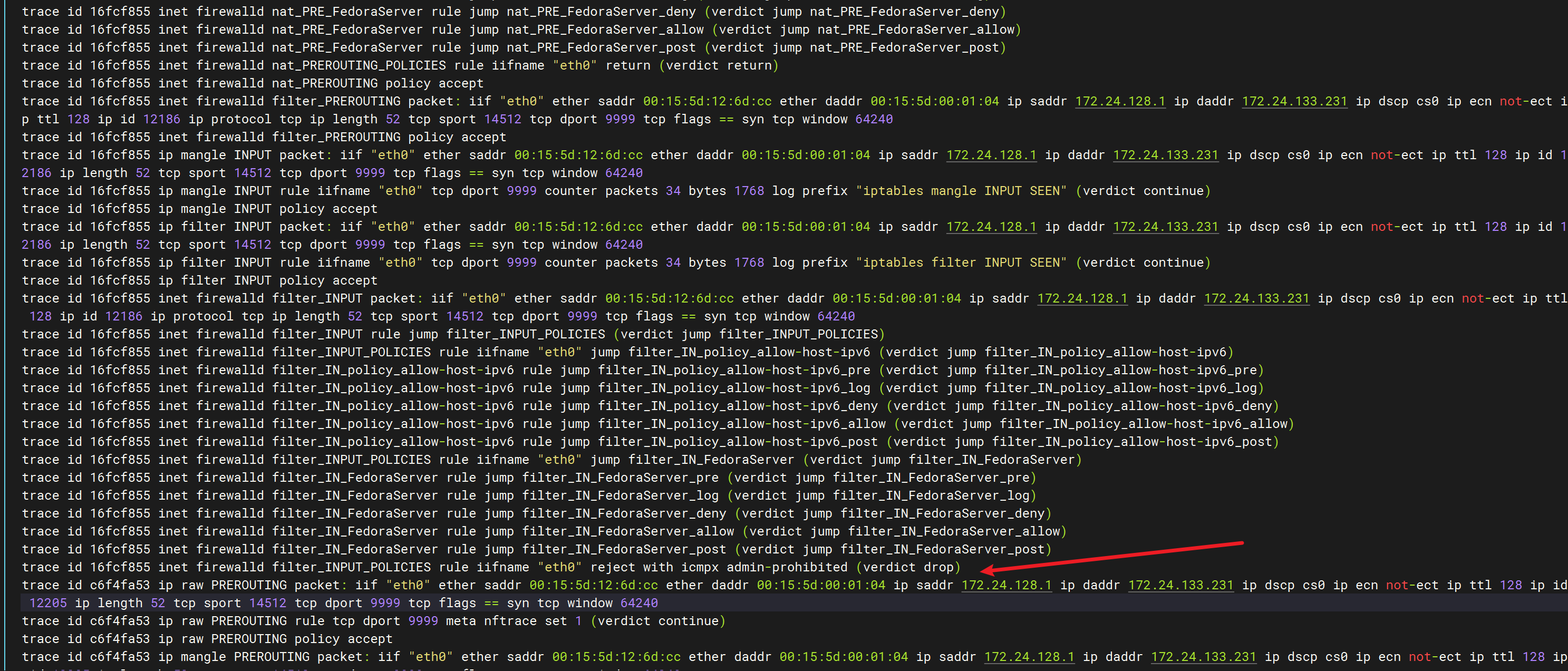

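OK, so far we know that nftables is most likely dropping the packet. Next, let's find out exactly where.

The official documentation describes a tracing method: Ruleset debug/tracing – nftables wiki.

With it we can watch how a packet travels through nftables: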

root@localhost:/boot# nft add rule ip raw PREROUTING tcp dport 9999 meta nftrace set 1

The output looks like this:

root@localhost:/boot# nft monitor trace

trace id 16fcf855 ip raw PREROUTING packet: iif "eth0" ether saddr 00:15:5d:12:6d:cc ether daddr 00:15:5d:00:01:04 ip saddr 172.24.128.1 ip daddr 172.24.133.231 ip dscp cs0 ip ecn not-ect ip ttl 128 ip id 12186 ip length 52 tcp sport 14512 tcp dport 9999 tcp flags == syn tcp window 64240

trace id 16fcf855 ip raw PREROUTING rule tcp dport 9999 meta nftrace set 1 (verdict continue)

trace id 16fcf855 ip raw PREROUTING policy accept

trace id 16fcf855 ip mangle PREROUTING packet: iif "eth0" ether saddr 00:15:5d:12:6d:cc ether daddr 00:15:5d:00:01:04 ip saddr 172.24.128.1 ip daddr 172.24.133.231 ip dscp cs0 ip ecn not-ect ip ttl 128 ip id 12186 ip length 52 tcp sport 14512 tcp dport 9999 tcp flags == syn tcp window 64240

trace id 16fcf855 ip mangle PREROUTING rule iifname "eth0" tcp dport 9999 counter packets 34 bytes 1768 log prefix "iptables mangle PREROUTING SE" (verdict continue)

trace id 16fcf855 ip mangle PREROUTING policy accept

trace id 16fcf855 inet firewalld mangle_PREROUTING packet: iif "eth0" ether saddr 00:15:5d:12:6d:cc ether daddr 00:15:5d:00:01:04 ip saddr 172.24.128.1 ip daddr 172.24.133.231 ip dscp cs0 ip ecn not-ect ip ttl 128 ip id 12186 ip protocol tcp ip length 52 tcp sport 14512 tcp dport 9999 tcp flags == syn tcp window 64240

trace id 16fcf855 inet firewalld mangle_PREROUTING rule jump mangle_PREROUTING_POLICIES (verdict jump mangle_PREROUTING_POLICIES)

trace id 16fcf855 inet firewalld mangle_PREROUTING_POLICIES rule iifname "eth0" jump mangle_PRE_policy_allow-host-ipv6 (verdict jump mangle_PRE_policy_allow-host-ipv6)

trace id 16fcf855 inet firewalld mangle_PRE_policy_allow-host-ipv6 rule jump mangle_PRE_policy_allow-host-ipv6_pre (verdict jump mangle_PRE_policy_allow-host-ipv6_pre)

trace id 16fcf855 inet firewalld mangle_PRE_policy_allow-host-ipv6 rule jump mangle_PRE_policy_allow-host-ipv6_log (verdict jump mangle_PRE_policy_allow-host-ipv6_log)

trace id 16fcf855 inet firewalld mangle_PRE_policy_allow-host-ipv6 rule jump mangle_PRE_policy_allow-host-ipv6_deny (verdict jump mangle_PRE_policy_allow-host-ipv6_deny)

trace id 16fcf855 inet firewalld mangle_PRE_policy_allow-host-ipv6 rule jump mangle_PRE_policy_allow-host-ipv6_allow (verdict jump mangle_PRE_policy_allow-host-ipv6_allow)

trace id 16fcf855 inet firewalld mangle_PRE_policy_allow-host-ipv6 rule jump mangle_PRE_policy_allow-host-ipv6_post (verdict jump mangle_PRE_policy_allow-host-ipv6_post)

trace id 16fcf855 inet firewalld mangle_PREROUTING_POLICIES rule iifname "eth0" jump mangle_PRE_FedoraServer (verdict jump mangle_PRE_FedoraServer)

trace id 16fcf855 inet firewalld mangle_PRE_FedoraServer rule jump mangle_PRE_FedoraServer_pre (verdict jump mangle_PRE_FedoraServer_pre)

trace id 16fcf855 inet firewalld mangle_PRE_FedoraServer rule jump mangle_PRE_FedoraServer_log (verdict jump mangle_PRE_FedoraServer_log)

trace id 16fcf855 inet firewalld mangle_PRE_FedoraServer rule jump mangle_PRE_FedoraServer_deny (verdict jump mangle_PRE_FedoraServer_deny)

trace id 16fcf855 inet firewalld mangle_PRE_FedoraServer rule jump mangle_PRE_FedoraServer_allow (verdict jump mangle_PRE_FedoraServer_allow)

trace id 16fcf855 inet firewalld mangle_PRE_FedoraServer rule jump mangle_PRE_FedoraServer_post (verdict jump mangle_PRE_FedoraServer_post)

trace id 16fcf855 inet firewalld mangle_PREROUTING_POLICIES rule iifname "eth0" return (verdict return)

trace id 16fcf855 inet firewalld mangle_PREROUTING policy accept

trace id 16fcf855 ip nat PREROUTING packet: iif "eth0" ether saddr 00:15:5d:12:6d:cc ether daddr 00:15:5d:00:01:04 ip saddr 172.24.128.1 ip daddr 172.24.133.231 ip dscp cs0 ip ecn not-ect ip ttl 128 ip id 12186 ip length 52 tcp sport 14512 tcp dport 9999 tcp flags == syn tcp window 64240

trace id 16fcf855 ip nat PREROUTING rule iifname "eth0" tcp dport 9999 counter packets 34 bytes 1768 log prefix "iptables nat PREROUTING SEEN" (verdict continue)

trace id 16fcf855 ip nat PREROUTING policy accept

trace id 16fcf855 inet firewalld nat_PREROUTING packet: iif "eth0" ether saddr 00:15:5d:12:6d:cc ether daddr 00:15:5d:00:01:04 ip saddr 172.24.128.1 ip daddr 172.24.133.231 ip dscp cs0 ip ecn not-ect ip ttl 128 ip id 12186 ip protocol tcp ip length 52 tcp sport 14512 tcp dport 9999 tcp flags == syn tcp window 64240

trace id 16fcf855 inet firewalld nat_PREROUTING rule jump nat_PREROUTING_POLICIES (verdict jump nat_PREROUTING_POLICIES)

trace id 16fcf855 inet firewalld nat_PREROUTING_POLICIES rule iifname "eth0" jump nat_PRE_policy_allow-host-ipv6 (verdict jump nat_PRE_policy_allow-host-ipv6)

trace id 16fcf855 inet firewalld nat_PRE_policy_allow-host-ipv6 rule jump nat_PRE_policy_allow-host-ipv6_pre (verdict jump nat_PRE_policy_allow-host-ipv6_pre)

trace id 16fcf855 inet firewalld nat_PRE_policy_allow-host-ipv6 rule jump nat_PRE_policy_allow-host-ipv6_log (verdict jump nat_PRE_policy_allow-host-ipv6_log)

trace id 16fcf855 inet firewalld nat_PRE_policy_allow-host-ipv6 rule jump nat_PRE_policy_allow-host-ipv6_deny (verdict jump nat_PRE_policy_allow-host-ipv6_deny)

trace id 16fcf855 inet firewalld nat_PRE_policy_allow-host-ipv6 rule jump nat_PRE_policy_allow-host-ipv6_allow (verdict jump nat_PRE_policy_allow-host-ipv6_allow)

trace id 16fcf855 inet firewalld nat_PRE_policy_allow-host-ipv6 rule jump nat_PRE_policy_allow-host-ipv6_post (verdict jump nat_PRE_policy_allow-host-ipv6_post)

trace id 16fcf855 inet firewalld nat_PREROUTING_POLICIES rule iifname "eth0" jump nat_PRE_FedoraServer (verdict jump nat_PRE_FedoraServer)

trace id 16fcf855 inet firewalld nat_PRE_FedoraServer rule jump nat_PRE_FedoraServer_pre (verdict jump nat_PRE_FedoraServer_pre)

trace id 16fcf855 inet firewalld nat_PRE_FedoraServer rule jump nat_PRE_FedoraServer_log (verdict jump nat_PRE_FedoraServer_log)

trace id 16fcf855 inet firewalld nat_PRE_FedoraServer rule jump nat_PRE_FedoraServer_deny (verdict jump nat_PRE_FedoraServer_deny)

trace id 16fcf855 inet firewalld nat_PRE_FedoraServer rule jump nat_PRE_FedoraServer_allow (verdict jump nat_PRE_FedoraServer_allow)

trace id 16fcf855 inet firewalld nat_PRE_FedoraServer rule jump nat_PRE_FedoraServer_post (verdict jump nat_PRE_FedoraServer_post)

trace id 16fcf855 inet firewalld nat_PREROUTING_POLICIES rule iifname "eth0" return (verdict return)

trace id 16fcf855 inet firewalld nat_PREROUTING policy accept

trace id 16fcf855 inet firewalld filter_PREROUTING packet: iif "eth0" ether saddr 00:15:5d:12:6d:cc ether daddr 00:15:5d:00:01:04 ip saddr 172.24.128.1 ip daddr 172.24.133.231 ip dscp cs0 ip ecn not-ect ip ttl 128 ip id 12186 ip protocol tcp ip length 52 tcp sport 14512 tcp dport 9999 tcp flags == syn tcp window 64240

trace id 16fcf855 inet firewalld filter_PREROUTING policy accept

trace id 16fcf855 ip mangle INPUT packet: iif "eth0" ether saddr 00:15:5d:12:6d:cc ether daddr 00:15:5d:00:01:04 ip saddr 172.24.128.1 ip daddr 172.24.133.231 ip dscp cs0 ip ecn not-ect ip ttl 128 ip id 12186 ip length 52 tcp sport 14512 tcp dport 9999 tcp flags == syn tcp window 64240

trace id 16fcf855 ip mangle INPUT rule iifname "eth0" tcp dport 9999 counter packets 34 bytes 1768 log prefix "iptables mangle INPUT SEEN" (verdict continue)

trace id 16fcf855 ip mangle INPUT policy accept

trace id 16fcf855 ip filter INPUT packet: iif "eth0" ether saddr 00:15:5d:12:6d:cc ether daddr 00:15:5d:00:01:04 ip saddr 172.24.128.1 ip daddr 172.24.133.231 ip dscp cs0 ip ecn not-ect ip ttl 128 ip id 12186 ip length 52 tcp sport 14512 tcp dport 9999 tcp flags == syn tcp window 64240

trace id 16fcf855 ip filter INPUT rule iifname "eth0" tcp dport 9999 counter packets 34 bytes 1768 log prefix "iptables filter INPUT SEEN" (verdict continue)

trace id 16fcf855 ip filter INPUT policy accept

trace id 16fcf855 inet firewalld filter_INPUT packet: iif "eth0" ether saddr 00:15:5d:12:6d:cc ether daddr 00:15:5d:00:01:04 ip saddr 172.24.128.1 ip daddr 172.24.133.231 ip dscp cs0 ip ecn not-ect ip ttl 128 ip id 12186 ip protocol tcp ip length 52 tcp sport 14512 tcp dport 9999 tcp flags == syn tcp window 64240

trace id 16fcf855 inet firewalld filter_INPUT rule jump filter_INPUT_POLICIES (verdict jump filter_INPUT_POLICIES)

trace id 16fcf855 inet firewalld filter_INPUT_POLICIES rule iifname "eth0" jump filter_IN_policy_allow-host-ipv6 (verdict jump filter_IN_policy_allow-host-ipv6)

trace id 16fcf855 inet firewalld filter_IN_policy_allow-host-ipv6 rule jump filter_IN_policy_allow-host-ipv6_pre (verdict jump filter_IN_policy_allow-host-ipv6_pre)

trace id 16fcf855 inet firewalld filter_IN_policy_allow-host-ipv6 rule jump filter_IN_policy_allow-host-ipv6_log (verdict jump filter_IN_policy_allow-host-ipv6_log)

trace id 16fcf855 inet firewalld filter_IN_policy_allow-host-ipv6 rule jump filter_IN_policy_allow-host-ipv6_deny (verdict jump filter_IN_policy_allow-host-ipv6_deny)

trace id 16fcf855 inet firewalld filter_IN_policy_allow-host-ipv6 rule jump filter_IN_policy_allow-host-ipv6_allow (verdict jump filter_IN_policy_allow-host-ipv6_allow)

trace id 16fcf855 inet firewalld filter_IN_policy_allow-host-ipv6 rule jump filter_IN_policy_allow-host-ipv6_post (verdict jump filter_IN_policy_allow-host-ipv6_post)

trace id 16fcf855 inet firewalld filter_INPUT_POLICIES rule iifname "eth0" jump filter_IN_FedoraServer (verdict jump filter_IN_FedoraServer)

trace id 16fcf855 inet firewalld filter_IN_FedoraServer rule jump filter_IN_FedoraServer_pre (verdict jump filter_IN_FedoraServer_pre)

trace id 16fcf855 inet firewalld filter_IN_FedoraServer rule jump filter_IN_FedoraServer_log (verdict jump filter_IN_FedoraServer_log)

trace id 16fcf855 inet firewalld filter_IN_FedoraServer rule jump filter_IN_FedoraServer_deny (verdict jump filter_IN_FedoraServer_deny)

trace id 16fcf855 inet firewalld filter_IN_FedoraServer rule jump filter_IN_FedoraServer_allow (verdict jump filter_IN_FedoraServer_allow)

trace id 16fcf855 inet firewalld filter_IN_FedoraServer rule jump filter_IN_FedoraServer_post (verdict jump filter_IN_FedoraServer_post)

trace id 16fcf855 inet firewalld filter_INPUT_POLICIES rule iifname "eth0" reject with icmpx admin-prohibited (verdict drop)

We can see that the packet is rejected in nftables table inet firewalld, chain filter_INPUT_POLICIES (and an ICMP reply is sent back at the same time).
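The trace is long, and the only line that really matters is the one whose verdict is drop. As a minimal sketch, the parser below scans `nft monitor trace` output in the format shown above and returns every rule or chain policy that dropped a packet. The function name `find_drops` is illustrative.

```python
import re

# "trace id <id> <family> <table> <chain> <rest of line>"
TRACE_RE = re.compile(
    r"^trace id (?P<id>[0-9a-f]+) (?P<family>\w+) (?P<table>\S+) "
    r"(?P<chain>\S+) (?P<rest>.*)$"
)
# "rule <rule text> (verdict <verdict>[ <jump target>])"
VERDICT_RE = re.compile(r"^rule (?P<rule>.*) \(verdict (?P<verdict>\w+)(?: \S+)?\)$")


def find_drops(lines):
    """Return one dict per trace line that ended in a drop."""
    drops = []
    for line in lines:
        trace = TRACE_RE.match(line.strip())
        if not trace:
            continue
        rest = trace.group("rest")
        rule = VERDICT_RE.match(rest)
        if rule and rule.group("verdict") == "drop":
            dropped_by = rule.group("rule")
        elif rest == "policy drop":
            dropped_by = "(chain policy)"
        else:
            continue  # packet header lines, jumps, continues, accepts
        drops.append({
            "id": trace.group("id"),
            "family": trace.group("family"),
            "table": trace.group("table"),
            "chain": trace.group("chain"),
            "rule": dropped_by,
        })
    return drops
```

Fed the trace above, it reports a single drop in table firewalld, chain filter_INPUT_POLICIES, caused by the rule `iifname "eth0" reject with icmpx admin-prohibited`.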

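Seeing this, my guess is that firewalld is enabled by default on CentOS/Fedora. I have run into plenty of firewall problems like this before, where a port simply had not been opened.

Next, let's look at how this rule is actually written: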

root@localhost:~# nft list tables

table ip raw

table ip mangle

table ip nat

table ip filter

table inet firewalld

root@localhost:~# nft list table inet firewalld

The output is long, so here are only the parts relevant to us:

chain filter_INPUT {

type filter hook input priority filter + 10; policy accept;

ct state { established, related } accept

ct status dnat accept

iifname "lo" accept

ct state invalid drop

jump filter_INPUT_POLICIES

reject with icmpx admin-prohibited

}

chain filter_INPUT_POLICIES {

iifname "eth0" jump filter_IN_policy_allow-host-ipv6

iifname "eth0" jump filter_IN_FedoraServer

iifname "eth0" reject with icmpx admin-prohibited

jump filter_IN_policy_allow-host-ipv6

jump filter_IN_FedoraServer

reject with icmpx admin-prohibited

}

chain filter_IN_FedoraServer {

jump filter_IN_FedoraServer_pre

jump filter_IN_FedoraServer_log

jump filter_IN_FedoraServer_deny

jump filter_IN_FedoraServer_allow

jump filter_IN_FedoraServer_post

meta l4proto { icmp, ipv6-icmp } accept

}

chain filter_IN_FedoraServer_pre {

}

chain filter_IN_FedoraServer_log {

}

chain filter_IN_FedoraServer_deny {

}

chain filter_IN_FedoraServer_allow {

tcp dport 22 accept

ip6 daddr fe80::/64 udp dport 546 accept

tcp dport 9090 accept

}

chain filter_IN_FedoraServer_post {

}

The path the packet takes is:

filter_INPUT (the connection is not yet established, so the ct state rule does not accept it)

filter_INPUT_POLICIES

filter_IN_policy_allow-host-ipv6 (nothing applies here, we are on IPv4)

filter_IN_FedoraServer

filter_IN_FedoraServer_pre

filter_IN_FedoraServer_log

filter_IN_FedoraServer_deny

filter_IN_FedoraServer_allow (no match)

tcp dport 22 accept

ip6 daddr fe80::/64 udp dport 546 accept

tcp dport 9090 accept

filter_IN_FedoraServer_post

meta l4proto { icmp, ipv6-icmp } accept (this explains why ping works)

iifname "eth0" reject with icmpx admin-prohibited (nothing matched an accept, so the packet is rejected here and an ICMP error is sent back)
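The same walk can be written down as a tiny model, which makes it easy to check why ports 22 and 9090 and ping work while 9999 and 8001 do not. This is a deliberately simplified sketch of the rules listed above, not a general nftables evaluator: it ignores the IPv6-only DHCPv6 rule and the empty chains, and the function name and parameters are invented for this example.

```python
# TCP ports accepted by chain filter_IN_FedoraServer_allow in the listing above.
ALLOWED_TCP_PORTS = {22, 9090}


def fedora_server_verdict(l4proto, dport=None, ct_state="new", iifname="eth0"):
    """Return the verdict the ruleset above would give an incoming IPv4 packet."""
    # chain filter_INPUT, evaluated top to bottom
    if ct_state in {"established", "related"}:
        return "accept"
    if iifname == "lo":
        return "accept"
    if ct_state == "invalid":
        return "drop"
    # filter_INPUT_POLICIES -> filter_IN_FedoraServer -> ..._allow
    if l4proto == "tcp" and dport in ALLOWED_TCP_PORTS:
        return "accept"
    # last rule of filter_IN_FedoraServer
    if l4proto in {"icmp", "ipv6-icmp"}:
        return "accept"
    # final rule of filter_INPUT_POLICIES
    return "reject (icmpx admin-prohibited)"
```

With this model, `fedora_server_verdict("tcp", 22)` and `fedora_server_verdict("icmp")` are accepted, while `fedora_server_verdict("tcp", 9999)` ends in the reject rule, matching what the host observed.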

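Good, we have now found the root cause. The proper fix is to open the port in the firewall; since this is a test environment, I simply stopped firewalld and tried again.

# (before stopping firewalld)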

PS C:\Users\14190> curl -v http://172.24.133.231:9999

VERBOSE: GET with 0-byte payload

curl : Unable to connect to the remote server

At line:1 char:1

+ curl -v http://172.24.133.231:9999

+ ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

+ CategoryInfo : InvalidOperation: (System.Net.HttpWebRequest:HttpWebRequest) [Invoke-WebRequest], WebException

+ FullyQualifiedErrorId : WebCmdletWebResponseException,Microsoft.PowerShell.Commands.InvokeWebRequestCommand

# (after stopping firewalld)

PS C:\Users\14190> curl -v http://172.24.133.231:9999

VERBOSE: GET with 0-byte payload

VERBOSE: received 1328-byte response of content type text/html; charset=utf-8

StatusCode : 200

StatusDescription : OK

Content : <!DOCTYPE HTML>

<html lang="en">

<head>

<meta charset="utf-8">

<title>Directory listing for /</title>

</head>

<body>
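Stopping firewalld is fine on a throwaway test VM. The alternative mentioned above, opening only the port, might look like the sketch below. It builds the `firewall-cmd` invocations and, by default, only prints them. The helper names are hypothetical, and the zone name FedoraServer is an assumption read off the chain names in the ruleset (filter_IN_FedoraServer).

```python
import subprocess


def open_port_commands(port, proto="tcp", zone=None):
    """Build firewall-cmd invocations that open a port permanently."""
    add = ["firewall-cmd", "--permanent", f"--add-port={port}/{proto}"]
    if zone:
        # Without --zone, firewall-cmd acts on the default zone.
        add.insert(1, f"--zone={zone}")
    # --permanent only edits the stored config; --reload applies it.
    return [add, ["firewall-cmd", "--reload"]]


def run_commands(commands, dry_run=True):
    """Print the commands, or run them as root when dry_run is False."""
    for argv in commands:
        if dry_run:
            print(" ".join(argv))
        else:
            subprocess.run(argv, check=True)


# Example: run_commands(open_port_commands(9999, zone="FedoraServer"))
```

Keeping the dry run as the default means the sketch can be read and tried without touching the firewall; pass `dry_run=False` only on the VM itself.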

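Looking back at how this was solved, here is how we narrowed it down to the root cause step by step.

Recap

windows host ping vm (works)

windows host ssh vm port 22 (works)

windows host curl vm port 9999 (self-hosted service; the Windows host gets no response)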

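- Check that the service on port 9999 is healthy, to rule out a problem with the service itself.

Accessing it locally on the VM works, so the service is fine. Swapping the service for nginx gives the same result from the host.

- With the service itself ruled out, the problem must lie in the network between the Windows host and the VM. Capture packets to see whether they reach the VM:

tcpdump -i any -s0 -nnn -vvv port 9999 -w server.pcap

tcpdump shows that the packets do reach the VM; the VM just never replies.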

- Next, determine whether the application received the packet, or whether it was already dropped in the kernel.

To tell whether the application received the packet we could add logging to it. Working on the premise that the application must not be modified, we instead added log rules at the receive, send, and forward hooks:

iptables --table raw -A PREROUTING -i eth0 -p tcp --dport 9999 -j LOG --log-prefix "iptables raw PREROUTING SEEN"

iptables --table mangle -A PREROUTING -i eth0 -p tcp --dport 9999 -j LOG --log-prefix "iptables mangle PREROUTING SEEN"

iptables --table nat -A PREROUTING -i eth0 -p tcp --dport 9999 -j LOG --log-prefix "iptables nat PREROUTING SEEN"

# local input

iptables --table mangle -A INPUT -i eth0 -p tcp --dport 9999 -j LOG --log-prefix "iptables mangle INPUT SEEN"

iptables --table nat -A INPUT -i eth0 -p tcp --dport 9999 -j LOG --log-prefix "iptables nat INPUT SEEN"

iptables --table filter -A INPUT -i eth0 -p tcp --dport 9999 -j LOG --log-prefix "iptables filter INPUT SEEN"

# forward

iptables --table mangle -A FORWARD -i eth0 -p tcp --dport 9999 -j LOG --log-prefix "iptables mangle FORWARD SEEN"

iptables --table filter -A FORWARD -i eth0 -p tcp --dport 9999 -j LOG --log-prefix "iptables filter FORWARD SEEN"

#output

iptables --table filter -A OUTPUT -p tcp --sport 9999 -j LOG --log-prefix "iptables filter OUTPUT SEEN"

root@localhost:~# dmesg -e -w

Nothing was logged for OUTPUT or FORWARD, so the application did not reply and nothing was forwarded. filter INPUT did log the packet, and our application would not drop packets on its own, so the packet is being dropped during kernel processing.

- Next, listen to kernel events to see where the packet is dropped.

There are several ways to do this:

root@localhost:~# perf record -e skb:kfree_skb && perf script

root@localhost:~# bpftrace -e 'tracepoint:skb:kfree_skb {printf("%s: %d\n", comm, args->reason)}'

root@localhost:~# dropwatch -lkas

Initializing kallsyms db

dropwatch> set alertmode packet

Setting alert mode

Alert mode successfully set

dropwatch> start

All of these report the same kind of information. The output below is from the third option, dropwatch, which is the one we used:

drop at: nft_do_chain+0x40c/0x620 [nf_tables] (0xffffffffc0a514fc)

origin: software

input port ifindex: 2

timestamp: Sun Apr 21 10:19:48 2024 990406165 nsec

protocol: 0x800

length: 66

original length: 66

drop reason: NETFILTER_DROP

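- By watching the kernel's kfree_skb events, we found that the netfilter framework is dropping the packet. The common firewalls built on netfilter are iptables and nftables. We had already added log rules at the corresponding iptables hook points and found nothing unusual there, so this time we look at nftables:

root@localhost:/boot# nft add rule ip raw PREROUTING tcp dport 9999 meta nftrace set 1

With this we obtained the drop trace, along with the rule that caused the drop.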

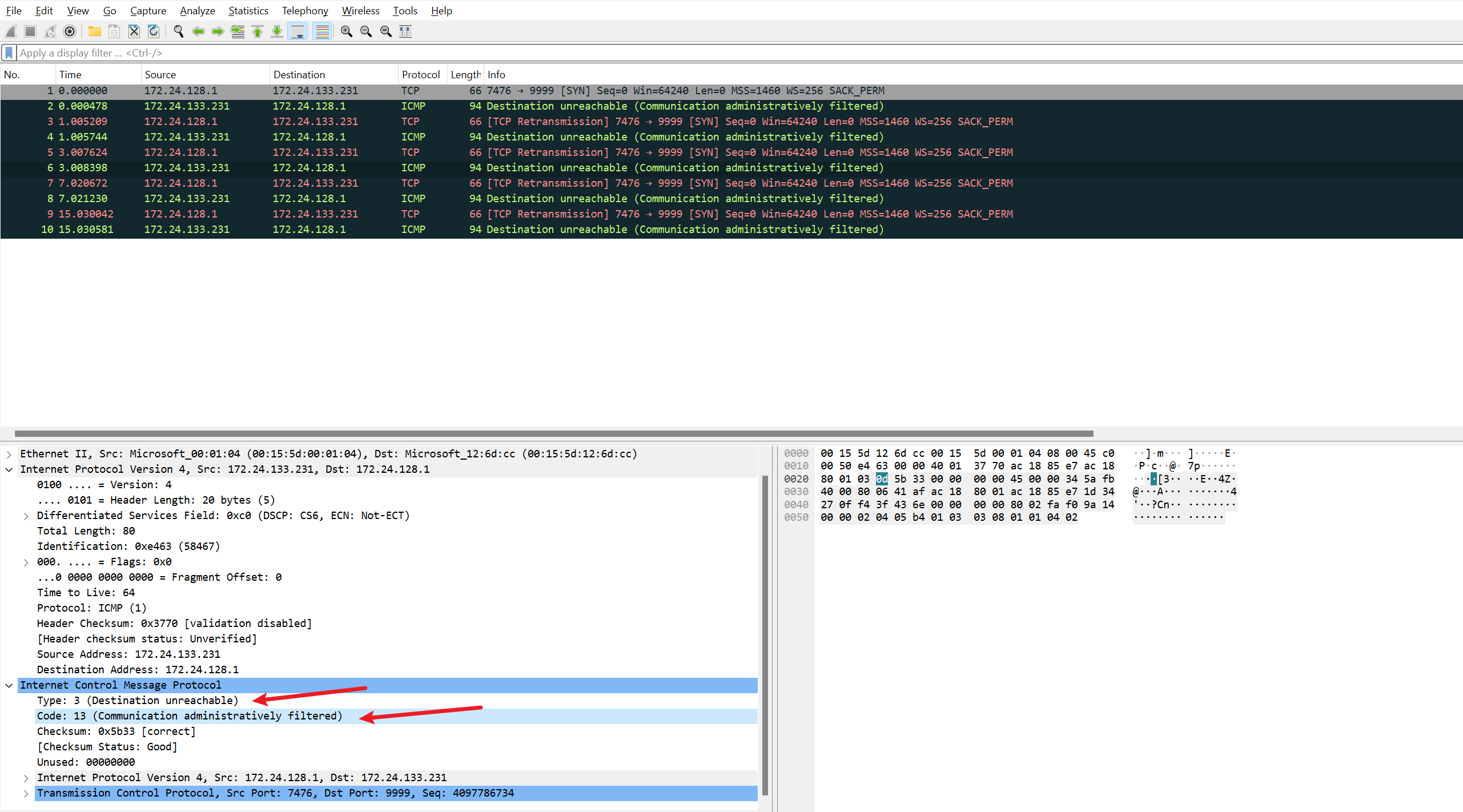

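At this point we notice that after the nftables rule intercepted the packet, it actually sent an ICMP packet back. But our tcpdump capture was filtered by port:

tcpdump -i any -s0 -nnn -vvv port 9999 -w server.pcap

so the ICMP packet was never captured. Adjust the capture filter, and exclude traffic on unrelated ports: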

tcpdump -tt -i eth0 -s0 -nnn -vvv host 172.24.133.231 and host 172.24.128.1 and not port 22

- As per RFC 1812, code 13 = Communication Administratively Prohibited – generated if a router cannot forward a packet due to administrative filtering

- Issue is on remote device sending this ICMP packet with error code 13

This usually means the packet was actively rejected, so a firewall should be considered.
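To recognize this reply in a capture, it is enough to look at the first two bytes of the ICMP header: type 3 (destination unreachable) with code 13. The sketch below decodes those bytes. The function name and the short code table are illustrative, and the table lists only a few well-known codes.

```python
import struct

# A few ICMPv4 "destination unreachable" (type 3) codes.
DEST_UNREACH_CODES = {
    0: "net-unreachable",
    1: "host-unreachable",
    3: "port-unreachable",
    13: "admin-prohibited",
}


def describe_icmp(header: bytes) -> str:
    """Describe an ICMPv4 message from the first 4 bytes of its header."""
    # Layout: type (1 byte), code (1 byte), checksum (2 bytes), network order.
    icmp_type, code, _checksum = struct.unpack("!BBH", header[:4])
    if icmp_type == 3:
        name = DEST_UNREACH_CODES.get(code, f"code {code}")
        return f"destination unreachable: {name}"
    return f"type {icmp_type} code {code}"
```

For example, `describe_icmp(bytes([3, 13, 0, 0]))` gives "destination unreachable: admin-prohibited", the IPv4 form of the `icmpx admin-prohibited` reject in the firewalld ruleset.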